Abstract of Multi-Touch Interaction

Multi-touch interaction here refers to human interaction with a computer in which more than one finger can provide input at a time. Its benefits are that it feels very natural and that it inherently supports simultaneous multi-user input. Several promising technologies are being developed for multi-touch. The two main alternatives at present are capacitive sensing and Frustrated Total Internal Reflection (FTIR). Both have been implemented successfully, and both could be used in smaller consumer devices such as desktop workstation screens and mobile phones. Multi-touch screens present new possibilities for interaction. Gesture systems based on multi-touch have been developed, as well as multi-point systems. These systems have not been comprehensively tested on users. While the idea of multi-touch has been around for many years, recent implementations offer a glimpse of how it could soon become much more common in day-to-day life. A range of technology is being leveraged to support multi-touch, alongside research into the new forms of user interaction it can offer.

Many technologies have been used in small-scale prototype implementations, but few have achieved commercial success. Some that have are the Mitsubishi DiamondTouch, Microsoft Surface and the Apple iPhone. These products revolve around several currently promising techniques. The usability of these screens has not been thoroughly tested empirically, but developers have anecdotal evidence to support their claims. Multi-touch has applications in small-group collaboration, the military, modeling, and accessibility for people with disabilities.


Hand Tracking

The FTIR method is augmented to allow the touches of multiple users to be tracked and distinguished from each other. The augmentation also allows more accurate grouping of touches, so that multiple touches made by one person can be grouped to create arbitrarily complex gestures. It is achieved by tracking the hands of individual users with an overhead camera. This also addresses a criticism raised in [3] about the effect of other light sources on the accuracy of touch recognition: infrared light from the surrounding environment may cause a touch to be detected in error. Adding the camera image as a second reference makes this incorrect identification less likely, because detected light emissions can be cross-checked against the position of hands before they are registered as a touch. This paper suggests two methods for discriminating between users' hands. The first is skin colour segmentation. Previous research had shown that the intensity of skin colour is more important than the colour itself in distinguishing between people, so polarized lenses are used to remove the background image, and the intensity of the remaining image is used to distinguish users. The drawback is that users must wear short-sleeved shirts so that their skin is visible to the camera.

The other method, used by the authors, takes RGB images from the overhead camera and generates an image of a user's hand from the shape of the shadowed areas. The drawback of this approach is that the image on the table surface cannot change significantly in brightness or intensity during use, or the reference image that is subtracted from the camera image will no longer be valid. In both methods, a 'finger end' and a 'table end' of a user's arm are identified by the narrowing of the arm at its extremities. A user is identified partly by which side of the table they are on. This allows a unique identifier to be assigned to each user, so that their touches can be interpreted correctly. A complex event generation method is presented, which allows user touches to be fired to many listeners and recorded in a user history. The benefits of tracking touches and associating them with a user are that touches can be recorded in a history and linked together to create gestures. It also increases the scope for accurate multi-user interaction.
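The event generation idea described above can be illustrated with a minimal sketch. This is not the authors' implementation; the names `TouchEvent`, `TouchDispatcher` and `user_for_side` are hypothetical, and identifying a user purely by table side is a simplifying assumption.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, List


@dataclass(frozen=True)
class TouchEvent:
    user_id: str      # hypothetical identifier assigned to a tracked user
    x: float          # touch position on the surface
    y: float
    timestamp: float  # seconds


def user_for_side(side: str) -> str:
    """Assumption: one user per table side, so the side alone names the user."""
    return f"user-{side}"


class TouchDispatcher:
    """Fires each touch to every registered listener and keeps a per-user history."""

    def __init__(self) -> None:
        self._listeners: List[Callable[[TouchEvent], None]] = []
        self.history: DefaultDict[str, List[TouchEvent]] = defaultdict(list)

    def add_listener(self, listener: Callable[[TouchEvent], None]) -> None:
        self._listeners.append(listener)

    def fire(self, event: TouchEvent) -> None:
        # Record first, so listeners can inspect the history including this touch.
        self.history[event.user_id].append(event)
        for listener in self._listeners:
            listener(event)
```

A gesture recogniser could be registered as one listener among several and read `history[user_id]` to link successive touches by the same user into a gesture.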

Touch Detection with Overhead Cameras

This paper advocates a different approach to multi-touch interaction that does not use FTIR. It uses two overhead cameras to track the positions of users' fingers and to detect touches. The major problem with existing camera-based systems is that they cannot discern between a touch and a near touch. This limits the ways in which a user can interact with the surface.

This paper provides a novel algorithm, developed by the authors, to overcome this problem. The algorithm uses a geometric model of the finger and complex interpolation, which allows a touch detection accuracy of around 98.48%. It relies on 'machine learning' methods, where the algorithm is 'trained' by running it over many images of users' hands on or near the surface. The focus here is on giving surfaces or screens that are not multi-touch enabled the appearance of multi-touch. Unlike in, the aim is not to support multi-user multi-touch directly, but multi-user support has been implemented by overlaying the image (captured by the overhead cameras) of one user's hand onto the workspace of another, using degrees of transparency of the other user's hand image to represent height. This means that the problem of determining which touch belongs to which user is circumvented. The use of two overhead cameras means that any surface can be used to accept a user's touch. The authors have used this to provide multi-touch on a tablet PC.
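As a rough illustration of using transparency to represent height, the sketch below maps a hand's height above the surface to an overlay opacity. The function name `hand_alpha`, the linear mapping, the 100 mm ceiling and the direction (a touching hand is fully opaque) are all assumptions made for illustration; the source does not specify how the authors chose the mapping.

```python
def hand_alpha(height_mm: float, max_height_mm: float = 100.0) -> float:
    """Return overlay opacity in [0, 1] for a remote user's hand image.

    Assumed mapping: height 0 (touching) -> 1.0 (opaque),
    height >= max_height_mm -> 0.0 (fully transparent), linear in between.
    """
    if max_height_mm <= 0:
        raise ValueError("max_height_mm must be positive")
    # Clamp so sensor noise below the surface or very high hands stay in range.
    clamped = min(max(height_mm, 0.0), max_height_mm)
    return 1.0 - clamped / max_height_mm
```

Under this assumed mapping, a hand hovering halfway to the ceiling would be drawn at half opacity, so a collaborator can see at a glance whether the other user is touching or only approaching the surface.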

Interaction Techniques

The development of technology to support multi-touch screens has been matched by the development of multi-touch and gesture-based methods of interaction. Work has been done on gesture recognition for people with physical disabilities, since gestures normally require a full range of hand function and are difficult to perform for those with limited range in their fingers or wrists. People with such disabilities are often still able to gesture with parts of their palm or the lower side of the hand. These gestures are command based: gesture 'a', for example, could be interpreted to mean go up a level in a directory structure, and 'b' to go forward in a web page. An experiment showed that these gestures can be performed easily by users with limited hand function.
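The command-based mapping described above amounts to a lookup from a recognised gesture label to an action. A minimal sketch follows, assuming the recogniser has already produced a label; the command names and the `interpret` function are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical command table mirroring the two examples in the text.
GESTURE_COMMANDS: Dict[str, str] = {
    "a": "directory_up",      # go up a level in a directory structure
    "b": "browser_forward",   # go forward in a web page
}


def interpret(gesture_label: str) -> Optional[str]:
    """Return the command bound to a gesture label, or None if it is unbound."""
    return GESTURE_COMMANDS.get(gesture_label)
```

Keeping the table separate from the recogniser means the same small set of easy-to-perform gestures could be rebound to different commands per application.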

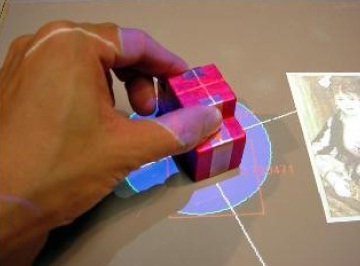

This is an example of using objects to interact with a capacitive touch screen. The block on the screen in Figure 7 does not itself trigger a touch event, as it does not conduct electricity. When the user touches the block, a capacitive connection is created and the object registers as a touch. Objects can be uniquely identified and registered with the system through a kind of 'barcode' system, which allows objects to be used as commands. For example, a particular object could be moved on the screen to indicate that data should be transferred from one place to another.
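The behaviour described above, where a registered object acts as a command only while a user is touching it, can be sketched as follows. The class `ObjectRegistry`, the tag strings and the command names are hypothetical; how the 'barcode' is actually read from the capacitive pattern is not covered by the source and is not modelled here.

```python
from typing import Dict, Optional


class ObjectRegistry:
    """Maps an object's identifying tag to the command it stands for."""

    def __init__(self) -> None:
        self._commands: Dict[str, str] = {}

    def register(self, tag: str, command: str) -> None:
        self._commands[tag] = command

    def on_contact(self, tag: str, user_is_touching: bool) -> Optional[str]:
        # An untouched block forms no capacitive connection, so it is ignored.
        if not user_is_touching:
            return None
        # Unregistered tags yield None as well.
        return self._commands.get(tag)
```

For instance, a block registered with a hypothetical `"transfer_data"` command would produce that command only while a user holds it on the screen.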

The mouse is a common input device. A problem with the mouse is that it does not reflect how we manipulate things in real life. We often touch multiple points on an object's surface in order to manipulate it, for example to rotate it. This means that with a mouse we must break some tasks down into simpler steps, which may slow us down. Multi-touch allows a more subtle and more natural form of interaction.
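Rotation is a simple case where two touch points carry information a single pointer cannot. As a minimal sketch (the function name and the point representation are assumptions, and standard mathematical axes are assumed, so the sign flips on a screen whose y axis points down), the rotation can be read from the change in direction of the line joining the two fingers:

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def rotation_angle(p1_start: Point, p2_start: Point,
                   p1_end: Point, p2_end: Point) -> float:
    """Signed angle in radians by which the line between two touches turned."""
    before = math.atan2(p2_start[1] - p1_start[1], p2_start[0] - p1_start[0])
    after = math.atan2(p2_end[1] - p1_end[1], p2_end[0] - p1_end[0])
    delta = after - before
    # Wrap into [-pi, pi] so a small turn across the +/-pi boundary stays small.
    return math.atan2(math.sin(delta), math.cos(delta))
```

With one finger held still at the origin and the other moving from (1, 0) to (0, 1), this yields a quarter turn, which an application could apply directly to the touched object in a single step.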
